If you develop software that runs on Kubernetes, a local development cluster can significantly shorten your development iterations, because everything happens locally. I discuss the pros and cons of local clusters, then present Docker for Desktop, Rancher Desktop, kind, k3d and Minikube and how they compare. I finish with details on how to get locally-built images into the cluster and how to get Ingress to work.

Kubernetes development tools series

This article is part of a multi-part series about Kubernetes development tools:

– Local Kubernetes development clusters (this article)

– Kubernetes cluster introspection tools

– Development support tools

Introduction

For us developers, tooling is very important: it helps us stay productive. Modern IDEs (such as Microsoft’s Visual Studio, VS Code, or JetBrains’ IntelliJ-based IDEs) not only offer efficient code navigation, but also let us easily attach a debugger, display logs, or inspect the contents of our local database, all without having to go through a complicated setup.

As software deployment has evolved, the way we develop software has also needed to change, to stay (somewhat) “in sync” with how software runs in production. Some 10-15 years ago, we copied compiled application bundles (such as jar files or other binaries) onto production servers. Development happened “natively” on our development host OS, where we installed compilers, language runtimes and frameworks. Then came the shift to (Docker) containers and images, and IDEs adapted as well, giving us the ability to develop inside a container. With Kubernetes having become “mainstream” for many projects over the last few years, developers need to adapt again and learn new tooling. As you can also see in the Development support tools article, IDEs alone no longer suffice: additional (external) tools are needed.

One such “tool” is a development Kubernetes cluster, which is now a basic requirement for developing Kubernetes-based applications. This article presents several alternatives for locally-installable Kubernetes clusters. Installing them is quite easy, as they all offer streamlined installation routines.

Why use a local Kubernetes development cluster?

Many developers I talked to use remote development clusters, often set up by their organization’s platform team. So why should you consider using a local cluster instead? Let’s look at the pros and cons:

Clusters

Let’s take a look at the most streamlined and popular local clusters.

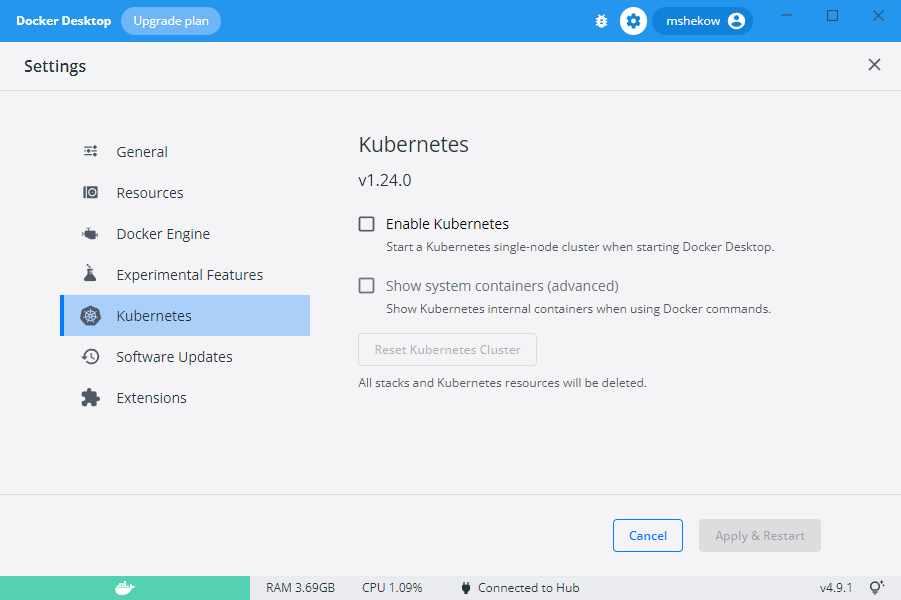

Docker for Desktop

Docker for Desktop comes with a simple, single-node Kubernetes cluster that you can start or stop from the Docker for Desktop GUI.

Advantages:

- Easy to install via a button click

- Ports for Services of type `LoadBalancer` are automatically published on the host (no manual port forwardings necessary)

- Docker images built on the host are automatically available inside the cluster

Disadvantages:

- No control over the Kubernetes version

- Only available on desktop OSes (Windows, macOS, Linux); it cannot run in a CI pipeline

- Only lets you run a single cluster at a time (which becomes problematic once you develop multiple K8s-based projects in parallel)

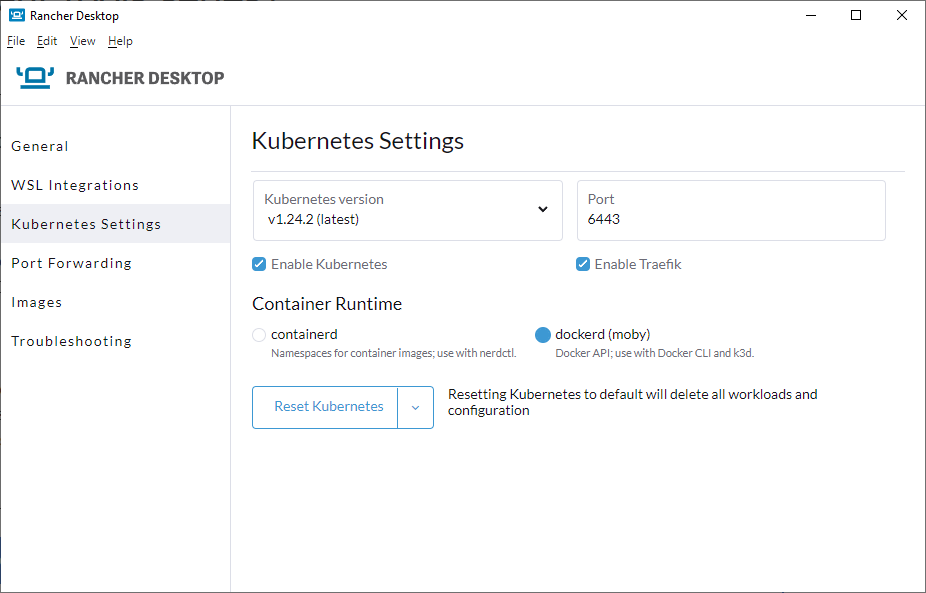

Rancher Desktop

Rancher Desktop is a free and open source replacement for Docker for Desktop, available on Windows, macOS and Linux. It includes two container runtimes (moby and containerd). Rancher Desktop offers a single-node Kubernetes cluster. It comes with a simple-to-use installer and a simple GUI.

Advantages:

- Easy to install

- Lets you choose / switch between many Kubernetes versions

- Host port-mappings are easy to configure, via Rancher Desktop’s GUI

- Docker images built on the host are automatically available inside the cluster

Disadvantages:

- Only available on desktop OSes (Windows, macOS, Linux); it cannot run in a CI pipeline

- Only lets you run a single cluster at a time (which becomes problematic once you develop multiple K8s-based projects in parallel)

kind

kind is a lightweight CLI-based tool that runs single- or multi-node clusters. It requires a Docker(-compatible) engine installed on your host.

Advantages:

- Easy to install via many package managers

- Excellent documentation

- Lets you choose / switch between many Kubernetes versions

- Supports running one or more clusters in parallel (but only single-node clusters can be paused/resumed – you always have to delete and re-create multi-node clusters)

- Also usable in CI -> you can use the same approach to set up a cluster in CI and for local development clusters, and it will behave identically in both scenarios

Disadvantages:

- To use Services of type `LoadBalancer`, the setup is more complicated, because you need to install MetalLB yourself (docs)

- To use your own Docker images in a kind cluster, you have to push your self-built images from the host into the cluster, which slows down the development iteration speed a bit

- Does not come with a local image registry, but there is a helper script to set one up (docs)
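A multi-node kind cluster is described by a small config file that is passed to `kind create cluster`. The following is a minimal sketch, not a definitive setup: the function names, the cluster name `dev` and the file name `kind-multinode.yaml` are hypothetical placeholders chosen for illustration.

```shell
# Sketch: write a kind config with one control-plane and two worker nodes.
# The file name is a placeholder and can be overridden via the first argument.
write_kind_multinode_config() {
  cat > "${1:-kind-multinode.yaml}" <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
EOF
}

# Sketch: create a named kind cluster from that config.
create_kind_cluster() {
  local name="${1:-dev}" config="${2:-kind-multinode.yaml}"
  write_kind_multinode_config "$config"
  kind create cluster --name "$name" --config "$config"
}
```

Calling `create_kind_cluster dev` creates the cluster; `kind delete cluster --name dev` removes it again.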

k3d

The underlying technology of k3d is k3s. k3s is a lightweight, production-grade Kubernetes distro which you could run directly on your host if you are on Linux. k3d is a cross-platform CLI wrapper around k3s, which lets you easily run single- or multi-node k3s clusters. It requires a Docker(-compatible) engine installed on your host. People have reported that k3d also works with Podman v4 or newer, or with Rancher Desktop with the Moby runtime, with some smaller caveats.

Advantages:

- Easy to install via many package managers

- Excellent documentation

- Lets you choose / switch between many Kubernetes versions

- Supports running one or more clusters in parallel (supports resuming single- and multi-node clusters)

- Also usable in CI -> you can use the same approach to set up a cluster in CI and for local development clusters, and it will behave identically in both scenarios

- Built-in virtual load balancer -> no extra setup needed to expose `LoadBalancer` services

- Built-in local image registry (as a means to get images into the cluster)

Disadvantages:

- To use your own Docker images in a k3d cluster, you have to push your self-built images from the host into the cluster, which slows down the development iteration speed a bit
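To illustrate the multi-node and pause/resume support mentioned above, here is a small sketch built on the `k3d cluster create`, `k3d cluster stop` and `k3d cluster start` commands. The function names and the default cluster name `dev` are assumptions made for this example.

```shell
# Sketch: create a k3d cluster with one server node and a number of agent nodes.
create_k3d_cluster() {
  local name="${1:-dev}" agents="${2:-2}"
  k3d cluster create "$name" --servers 1 --agents "$agents"
}

# Sketch: pause and resume a cluster without deleting it.
pause_k3d_cluster() { k3d cluster stop "${1:-dev}"; }
resume_k3d_cluster() { k3d cluster start "${1:-dev}"; }
```

For example, `create_k3d_cluster dev 2` followed later by `pause_k3d_cluster dev` frees up resources while keeping the cluster state for the next `resume_k3d_cluster dev`.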

Minikube

Minikube is a CLI tool that runs single- or multi-node clusters on top of various virtualization technologies, such as VMs or Docker.

Advantages:

- Supports many virtualization drivers, e.g. VMs (Hyper-V, VirtualBox, …) or Docker (see list)

- Lets you choose / switch between many Kubernetes versions

- Also usable in CI -> you can use the same approach to set up a cluster in CI and for local development clusters, and it will behave identically in both scenarios

- Offers many add-ons which let you install third-party components with a single command (which you would otherwise have to install manually, sometimes using several complicated commands). Examples include Kubernetes Dashboard, Istio service mesh, metrics server, a local registry, or the Elasticsearch-stack

Disadvantages:

- Slower start-up time, compared to kind or k3d
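As a rough sketch of the driver, multi-node and add-on features listed above, the following function starts a Minikube cluster on the Docker driver and enables the metrics server add-on. The function name, the profile name `dev` and the choice of the Docker driver are assumptions for illustration; pick the driver that fits your host.

```shell
# Sketch: start a multi-node Minikube cluster under a named profile,
# then enable one add-on. Profile name and node count are placeholders.
start_minikube() {
  local profile="${1:-dev}" nodes="${2:-2}"
  minikube start --profile "$profile" --driver docker --nodes "$nodes" &&
    minikube addons enable metrics-server --profile "$profile"
}
```

`minikube addons list` shows which add-ons are available for your Minikube version.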

Comparison table

| | Docker for Desktop | Rancher Desktop | kind | k3d | Minikube |
|---|---|---|---|---|---|
| Supports multi-node clusters | ❌ | ❌ | ✅ | ✅ | ✅ |
| No copying of locally-built images into cluster is required (faster) | ✅ | ✅ | ❌ | ❌ | ❌ |
| Choosable Kubernetes version | ❌ | ✅ | ✅ | ✅ | ✅ |
| Works in CI | ❌ | ❌ | ✅ | ✅ | ✅ |
| Built-in load balancer (for multi-node setups) | not applicable | not applicable | ❌ | ✅ | ✅ |
| Requirements | Desktop OS | Desktop OS | Docker daemon socket | Docker daemon socket | Docker daemon socket, or hypervisor |

Getting images into the cluster

To test your local code changes, your cluster must be able to access the (locally-built) Docker/OCI images that contain your code. There are several ways to achieve this:

- Push the image to a remote registry: you have to configure the registry’s credentials in your local cluster, so that it can pull images

- Push the image to a local registry: a local registry is usually not access-protected, but it must be accessible (TCP/network) from both the (Docker) build engine (when pushing) and from within the cluster (pulling)

- Load the image into the cluster’s container runtime directly (in case the cluster uses a different container runtime than the one you used to build the image) – kind, k3d and minikube offer shell commands to load the image into the cluster’s container runtime

Performance-wise, the third approach is often slower than the other two approaches. Since pushing to a local registry is faster than pushing to a remote registry, this makes the second approach the most performant one (at increased setup costs).
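For the third approach, each of the three CLI tools has its own load command. The sketch below wraps them in one helper; the function name and the default cluster name `dev` are hypothetical, and the image is assumed to exist already in the Docker engine on the host.

```shell
# Sketch: load a locally-built image into the container runtime of a local cluster.
# Usage: load_image_into_cluster <kind|k3d|minikube> <image> [cluster-or-profile-name]
load_image_into_cluster() {
  local tool="$1" image="$2" cluster="${3:-dev}"
  case "$tool" in
    kind)     kind load docker-image "$image" --name "$cluster" ;;
    k3d)      k3d image import "$image" --cluster "$cluster" ;;
    minikube) minikube image load "$image" --profile "$cluster" ;;
    *)        echo "unsupported tool: $tool" >&2; return 1 ;;
  esac
}
```

A typical iteration would then be `docker build -t my-app:dev-1 .` followed by `load_image_into_cluster k3d my-app:dev-1 dev`, where `my-app:dev-1` is a placeholder image name.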

The third approach has another caveat: it only works if the container runtime does not attempt to pull the image. A pull always contacts a registry (the images cached locally by the container runtime are not considered), so it fails for an image that exists only locally. Thus, if your Kubernetes workload objects (e.g. Pods) specify imagePullPolicy: Always, it won’t work. It can also fail if your workload object does not specify an imagePullPolicy at all. In this case, Kubernetes normally applies the default value, IfNotPresent, which sounds good (“use cached images if available”). But if the referenced image has the “:latest” tag (or no tag at all), Kubernetes sets the omitted policy to Always instead of IfNotPresent (see docs). To be safe, use a tag other than “:latest” or set imagePullPolicy: IfNotPresent explicitly.
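To make the pull-policy caveat concrete, here is a minimal sketch that writes a Pod manifest with an explicit tag and an explicit `imagePullPolicy: IfNotPresent`, so that an image loaded directly into the cluster is used without a registry pull. The function name, the Pod name `my-app` and the file name `pod.yaml` are placeholders invented for this example.

```shell
# Sketch: write a Pod manifest that uses an already-loaded image.
# Pass an image with an explicit tag other than ":latest", e.g. my-app:dev-1.
write_pod_manifest() {
  local image="$1" file="${2:-pod.yaml}"
  cat > "$file" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: my-app
spec:
  containers:
    - name: my-app
      image: ${image}
      imagePullPolicy: IfNotPresent
EOF
}
```

After `write_pod_manifest my-app:dev-1`, you would apply the result with `kubectl apply -f pod.yaml`.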

Getting Ingress to work

Often you want to access HTTP-based services from the browser running on your host. Although you can use kubectl port-forward to punch holes into your cluster, you may also want to use the ingress of your local cluster, which is typically something like Nginx or Traefik that comes with a Deployment and a Service of type LoadBalancer. The instructions for getting this to work depend on the local cluster you are using:

- Docker for Desktop: because Docker for Desktop automatically publishes ports for services of type `LoadBalancer` on the host, following the official instructions (e.g. for Nginx see here) will only work if the ports (typically 80 and 443) are not already in use on your host. At least on Windows, however, it is very likely that such ports are already in use. The workaround (for Nginx) is to download the `deploy.yaml` file mentioned in the official Nginx instructions, change the ports (80 and 443) to ones that are not yet in use on your host (see also here), then `kubectl apply` your locally-modified copy of the YAML file

- Rancher Desktop: services of type `LoadBalancer` (such as the Ingress) are automatically given an IP reachable from the host. If necessary, you can configure port mappings in the GUI of Rancher Desktop.

- kind: use kind’s `extraPortMappings` config option (docs)

- k3d: As explained in the official docs here, simply add the following to your `k3d cluster create` command: `-p "8081:80@loadbalancer"`. You can now access your ingress on port 8081 on the host

- minikube: use `minikube tunnel`, which establishes a temporary load balancer, providing an external IP to Services that are of `type: LoadBalancer` (whose external IP would otherwise remain pending)
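The kind and k3d variants can be sketched as follows. The function names, file name and default host ports are assumptions for illustration. For kind, the port mapping only forwards traffic to the node container; the ingress controller inside the cluster still has to listen on that node port, as described in kind's ingress docs.

```shell
# Sketch (kind): write a cluster config that maps a host port to port 80
# of the control-plane node container.
write_kind_ingress_config() {
  local file="${1:-kind-ingress.yaml}" host_port="${2:-8080}"
  cat > "$file" <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
    extraPortMappings:
      - containerPort: 80
        hostPort: ${host_port}
        protocol: TCP
EOF
}

# Sketch (k3d): map a host port to port 80 of k3d's built-in load balancer.
create_k3d_cluster_with_ingress() {
  local name="${1:-dev}" host_port="${2:-8081}"
  k3d cluster create "$name" -p "${host_port}:80@loadbalancer"
}
```

With kind, the generated file is passed via `kind create cluster --config kind-ingress.yaml`.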

Multiple Ingress hosts on a local machine

When you deploy Ingress objects in your cluster, an optional field in the YAML manifest is `host: some.dns.name.or.ip`, which lets you control the routing behavior (the rule then only applies to requests for that host name). Some tutorials tell you to put some fictional hostname into that host field and then modify your /etc/hosts file (or %windir%\system32\drivers\etc\hosts on Windows), adding a new line such as “127.0.0.1 fictional.host”.

An alternative to this approach is to use wildcard DNS services like nip.io where a host such as app.127.0.0.1.nip.io resolves to 127.0.0.1.
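As an illustration of the wildcard DNS variant, the sketch below writes an Ingress manifest whose `host` field uses a nip.io name. The function name, the file name and the assumption that the backing Service listens on port 80 are all hypothetical; depending on your ingress controller, you may additionally need to set `ingressClassName`.

```shell
# Sketch: write an Ingress that routes requests for a given host name
# to a Service (assumed to listen on port 80) with the given name.
write_ingress_manifest() {
  local host="$1" service="$2" file="${3:-ingress.yaml}"
  cat > "$file" <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${service}
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ${service}
                port:
                  number: 80
EOF
}
```

For example, `write_ingress_manifest app.127.0.0.1.nip.io my-app` followed by `kubectl apply -f ingress.yaml` lets you open `app.127.0.0.1.nip.io` in the browser, using whichever host port your cluster's ingress is mapped to.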

Conclusion

Assuming you have sufficient memory, local Kubernetes clusters can significantly shorten your development iterations, because everything happens locally. To choose the right one, you need to consider your requirements, such as:

- Do you need a multi-node cluster? If not, Rancher Desktop or Docker for Desktop are the fastest options, because you do not have to push locally-built images into the cluster

- Do you need to control the Kubernetes version? If so, Docker for Desktop is not suitable.

- Do you want to use the same test cluster in development and in CI? This would allow you to run automated CI tests locally as well.

- Which development tools (such as Tilt or DevSpace) do you want to use? How well do they support your chosen local cluster? I present these tools in part 3 of this article series.

Which local cluster do you use? Let me know in the comments!