On this page you will find projects I’ve worked on in the past and at present. They are divided into several sections:

Hobby projects

Kubernetes operator for Azure Pipelines agents (since 2023)

My open source Kubernetes operator manages elastically scalable agents for the Azure Pipelines CI/CD platform. As explained in this blog post, Azure Pipelines lacks an official, stable solution for elastically scaling agents that are fully customizable. The operator’s key features are “scale to zero” (which saves CPU & memory resources and therefore reduces infrastructure costs) and “dynamic sidecar containers”, as a means to run container-based jobs as part of your pipeline. The operator also solves the core problem of missing storage persistence when scaling agent pods to zero, by letting you define persistent, reusable cache volumes that are mounted into the Kubernetes Pod.

Focus time (since 2023)

If you have a hard time getting your work done on your PC or Mac because of constant interruptions, then this app is for you. Focus time is a free desktop app that runs in the background and regularly checks your calendar for planned focus time blocker events that you created (e.g. in Outlook). Whenever such a blocker event starts or ends, the app turns the focus mode of your operating system on or off accordingly, or runs any other commands that you specify (e.g. to close specific apps, like Microsoft Outlook or Teams). Focus time also lets you start an (unplanned) ad-hoc focus time session, which creates a focus time blocker event in your calendar that starts now and ends in N (configurable) minutes. You can also stop an ongoing focus time period early at any time, which shortens the calendar event accordingly. I discuss the app further in this blog post.

Directory Checksum (since 2022)

The Docker build cache avoids rebuilding those parts of a Docker image that were already built. Unfortunately, cache misses are hard to debug. As further explained in this blog post, one reason for cache misses is that COPY or ADD layers are rebuilt because of files that have changed since the last build. To pinpoint the exact files, I developed a CLI tool called directory-checksum. It recursively computes checksums of the contents of a directory and prints them, up to a depth you can specify. By adding it to your Dockerfile, you can compare its output across two docker build executions to determine the files causing the cache miss.
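The idea of a depth-limited, recursive directory checksum can be sketched in a few lines of Python. This is only an illustration of the technique, not the actual directory-checksum implementation: the function names `checksum` and `report`, the use of SHA-1, and the output format are all assumptions made for this example.

```python
import hashlib
from pathlib import Path


def checksum(path: Path) -> str:
    """Return a hex digest for a file, or for a directory's entire contents.

    Assumption: SHA-1 over file bytes; a directory's digest is derived from
    the names and digests of its (sorted) children.
    """
    digest = hashlib.sha1()
    if path.is_file():
        digest.update(path.read_bytes())
    else:
        for child in sorted(path.iterdir(), key=lambda p: p.name):
            digest.update(child.name.encode())
            digest.update(checksum(child).encode())
    return digest.hexdigest()


def report(root: Path, max_depth: int, depth: int = 0) -> list[str]:
    """List "<digest> <indented name>" lines, descending at most max_depth levels."""
    lines = [f"{checksum(root)} {'  ' * depth}{root.name}"]
    if root.is_dir() and depth < max_depth:
        for child in sorted(root.iterdir(), key=lambda p: p.name):
            lines.extend(report(child, max_depth, depth + 1))
    return lines


if __name__ == "__main__":
    # Print the checksums of the current directory, two levels deep.
    print("\n".join(report(Path(".").resolve(), max_depth=2)))
```

Comparing the printed lines of two runs shows which subtrees changed. The sketch recomputes child digests at every level and does not handle broken symlinks, which is acceptable for an illustration but not for large build contexts.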

Power Automate Flow for Outlook calendar sync (since 2022)

Having multiple calendars that are not synchronized often causes scheduling issues because of overlapping events. To solve this problem, I developed a Microsoft Power Automate flow that synchronizes two Outlook calendars. In this blog post, I explain the approach, and how it evolved from previous iterations (including their shortcomings). The Power Automate flow can be downloaded from GitHub here. There is also pseudo-code that you can use to build your own solution that is not based on Power Automate.

Maloney Fetcher (since 2021)

Die haarsträubenden Fälle des Philip Maloney (website) is a Swiss-German radio play in which a private investigator has to solve murder mystery cases. The author, Roger Graf, managed to make it very entertaining, thanks to absurdly weird characters and plot lines. Listening to the 15-25 minute long episodes is a fun (rather than nail-biting) experience! As further detailed in this blog post, I wanted to build an extensive archive of all episodes that should be free of duplicates. To achieve this, I built a Python application which fetches Maloney episodes from YouTube and the SRF 3 radio station, and attempts to detect duplicates using an acoustic fingerprinting library. The code is available on GitHub here.

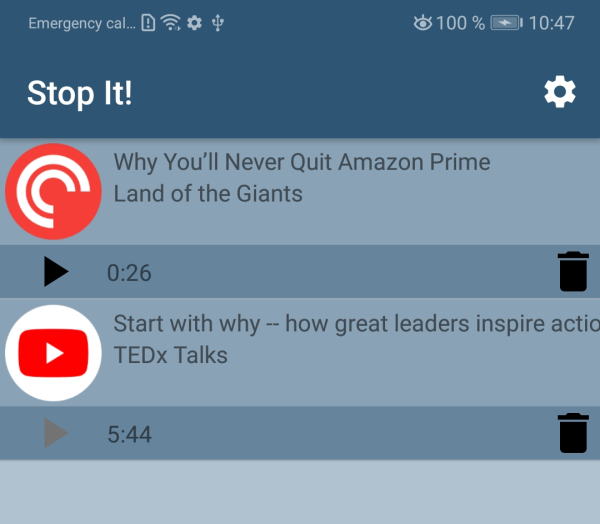

Stop It! (2020)

Have you ever wondered why there are bookmarks for books or websites, but not for audio tracks? Stop It! is an open source Android app that was born from a personal need: the ability to quickly create bookmarks for audio tracks on the go, without any hassle. Stop It! lets you create and manage audio bookmarks for any Android audio app. You simply need to pause and immediately un-pause the playback, e.g. using your headset controls. Stop It! will create a bookmark that contains the audio player app’s identity, the name of the track and artist, as well as a precise timestamp. This is invaluable for note-taking, as you can quickly jump back to the exact timestamp of interest in the podcast episode (or whatever you listened to). For selected audio apps, resuming playback is fully automatic. Stop It! works on Android 5 and newer.

Work projects (Fraunhofer FIT)

MAKSIM (2019 – 2020)

The goal of the MAKSIM research project (funded by BMWI) is to help electricity network operators better plan the maintenance and renewal of the power grid equipment they have installed in the field. Because existing measurement equipment is extremely costly, the premise of this project is that low-cost, MEMS-based sensors can provide a similar output quality. Together with our partners we installed sensors and a gateway box in dozens of power grid stations. The box includes an LTE router and a Raspberry Pi based industrial PC. During 2019 – 2020 I developed the client- and server-side software to safely receive, compress, cache, transmit and store collected sensor data for further processing. This included automatic provisioning of client updates from the server, and integrating several server-side technologies, such as Django and Elasticsearch for data visualization.

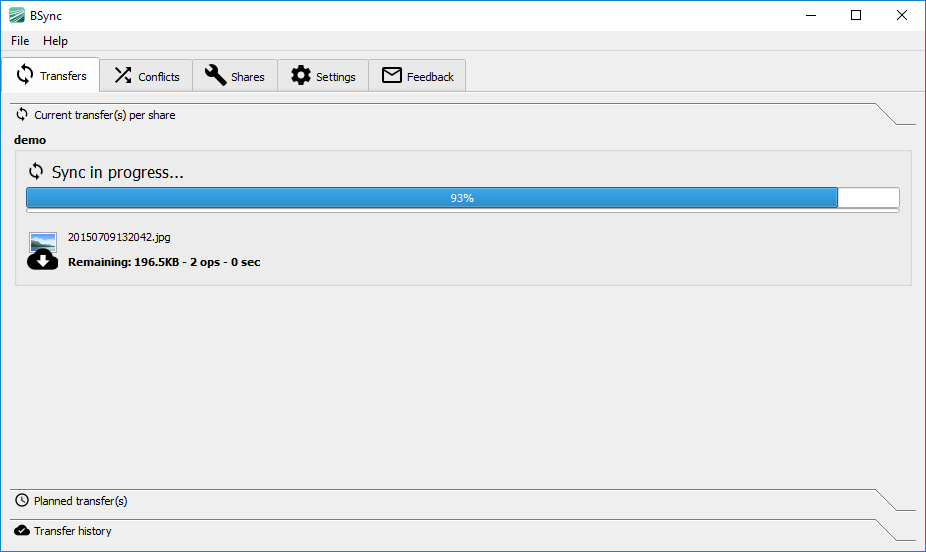

BSync (2014 – 2022)

BSync is a consumer-oriented file synchronizer for macOS and Windows that keeps several local folders synchronized with cloud storage. In contrast to similar tools like the Dropbox or OneDrive client, BSync was created from an academic background, as part of my PhD dissertation. I applied elaborate algorithms to solve edge cases most other tools did not care about (but should have). In particular, BSync has better support for users who work offline for extended time periods, during which many operations can accumulate or conflict. BSync always propagates the detected operations without side effects, avoiding conflicts whenever possible, and notifying users about real conflicts, which BSync resolves preliminarily. A designated part of the user interface shows a log of conflicts to the user and explains them in simple terms, to facilitate the recovery process. In addition, BSync’s architecture abstracts the file system specifics of different cloud storage providers, which makes it possible to add support for new providers with little effort.

Mobile 360 (2014)

In 2014 I helped develop a smartphone app internally called “Mobile360” that was used in an experiment to determine whether end-users would accept contextualized advertisements presented via push notifications. The app would continuously run in the background on the phones of volunteers who gave their consent (mostly students and employees of our client). I implemented a context matching engine for Android that collects various inputs, e.g. current location, activity such as walking vs. standing still, display state, ringer volume settings, proximity, or the current time and date. The input values are matched against a set of contexts, which are regularly retrieved from a server. This made it possible to show specific messages for a certain context, e.g. if all of the conditions “entered the central station geo-fence” + “it is a week day” + “time is between 7-9 AM” + “user’s activity has been ‘standing still’ for one minute” hold. The conjecture in this example is that the user is likely waiting for a train or bus. A special indoor version of the app heavily relied on Bluetooth Low Energy (BLE) beacons, which were pre-installed in all rooms of the volunteers’ homes to determine their location. While implementing such apps was already challenging back in 2014, mobile operating systems have since become more restrictive. To conserve power and prolong battery life, most sensors can no longer be continuously queried in the background. Targeting users with some sensors such as location is still possible, but with reduced resolution and increased delay.
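The matching rule described above, where a context applies only if all of its conditions hold, can be sketched as follows. The original engine ran on Android; this Python sketch only illustrates the principle, and every name in it (`Snapshot`, `Context`, `matching_messages`, the `central_station` geo-fence ID, the example message) is hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical bundle of the sensor inputs available at one moment."""
    geofences: frozenset[str]   # IDs of the geo-fences the user is inside
    activity: str               # e.g. "walking" or "standing_still"
    activity_seconds: int       # how long the current activity has lasted
    now: datetime


Condition = Callable[[Snapshot], bool]


@dataclass(frozen=True)
class Context:
    name: str
    message: str
    conditions: tuple[Condition, ...]

    def matches(self, snapshot: Snapshot) -> bool:
        # A context matches only if every single condition holds.
        return all(condition(snapshot) for condition in self.conditions)


def matching_messages(contexts: list[Context], snapshot: Snapshot) -> list[str]:
    """Return the messages of all contexts that match the snapshot."""
    return [c.message for c in contexts if c.matches(snapshot)]


# The example from the text, expressed as four conditions.
WAITING_AT_STATION = Context(
    name="waiting_at_station",
    message="Waiting for your train? Grab a coffee!",  # made-up message
    conditions=(
        lambda s: "central_station" in s.geofences,
        lambda s: s.now.weekday() < 5,                  # Monday to Friday
        lambda s: 7 <= s.now.hour < 9,
        lambda s: s.activity == "standing_still" and s.activity_seconds >= 60,
    ),
)
```

In the real system the contexts were retrieved from a server, so the conditions would have to be described as data rather than as code. Lambdas are used here purely to keep the sketch short.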

Auto AR (2012 – 2013)

AutoAR (German website) is an Augmented Reality system mounted on a car’s roof that visualizes building models which are planned to be erected at a specific location in the real world. Through Virtual Reality goggles, the co-driver sees a real-time visualization of the real world, as recorded by a 360° panoramic camera. This otherwise empty construction site is superimposed with a virtual model of the building. Thanks to centimeter-precision sensors, the viewer gets an accurate, real-time preview of where the building is located and how it fits into the context of the surrounding area. The VR goggles are connected to a laptop workstation that performs image and sensor data processing (including sensor fusion), and records all data streams to disk, for a later replay back in the office. Using a video see-through approach may seem odd, but it had (and still has) several advantages, such as a much larger field of view (compared to optical see-through AR headsets) and centimeter-precision location data, which even today’s devices cannot deliver. These two videos give you a better idea of the system in action.

Student projects

Master thesis: hand gesture recognition system

From 2011 – 2012 I worked as a student at Fraunhofer FIT, before becoming a full-time employee. The last project was my Master’s thesis, with the topic “Building an appearance-based hand gesture recognition system using the Microsoft Kinect”. I initially analyzed the ability to track hands and individual fingers at large distances (paper), but found that fingertips (and more generally, hand poses) are hard to identify at distances beyond 1.5 m between hand and sensor, due to limited depth image resolution. I then explored the use of skin-color classification from the high-resolution RGB image, but all attempts showed major deficiencies in real-world environments. The goal of extending the interaction space beyond 1.5 m with the Kinect turned out to be unattainable. I then developed a depth-data-based hand pose training and recognition system. Using a graphical tool, the user can design a set of hand poses (such as victory, fist, palm, etc.), train the system by showing it the poses and finally get a working classification system that can be integrated into any C++ application.

The video illustrates the procedure and also demonstrates a kiosk-application built on top of the classifier. This application named “FIT Xplorer” allows guests to navigate through a catalog of employees and projects of Fraunhofer FIT, using hand gestures. It was designed in a user-centered approach, involving users in requirements elicitation, prototype testing and evaluation of the final prototype.

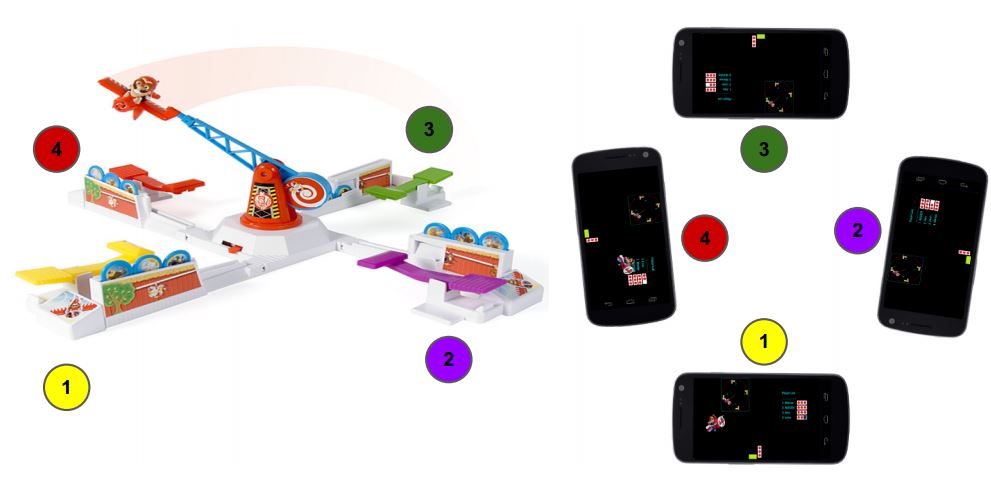

Looping Louie

In 2012 I was part of a team of five students developing an Android-based adaptation of the Looping Louie game, using the SCRUM agile software development framework. Looping Louie, as demonstrated in this YouTube video, is a board game not only played by children, but also a popular drinking game enjoyed by teenagers and adults. In our mobile version, each participant plays with her own device. Pressing the catapult lever is simulated by jerking the phone up towards the ceiling, done precisely when Louie’s plane passes by. Network communication and game advertising were implemented using a client-server architecture over WiFi, using the AllJoyn library. This project taught me valuable lessons in the areas of mobile networking, accelerometer-based gesture detection and SCRUM.

Claim your station

In 2011, a peer and I developed Claim your station, a location-based Android game, as part of a Mixed reality games lab. It uses GPS and NFC (Near Field Communication) to detect Touch&Travel touch points which Deutsche Bahn had installed at almost all railway stations throughout Germany at that time. Many stations featured a large number of these touch points, e.g. one per track. In Claim your station, each player is part of a team with a designated color (as seen in the image). The game’s mechanic is similar to “Domination” known from shooter games such as Call Of Duty. You capture the Touch&Travel touch points by holding your phone close to them (within a few centimeters). The team that holds the relative majority of captured points of any given station claims that station. Players are incentivized by two kinds of leader boards. First, players can earn points for themselves by capturing touch points (with bonus points given if the capture also claims the station as a side effect). These points are shown on a players’ leader board. Second, the teams’ leader board reflects the team rankings, as any claimed station regularly produces points for the claiming team. The perfect opportunity to play the game was the boring waiting period before the arrival of the next (connecting) train. Because it remained an unfinished prototype, the game was never published on Google Play.
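The claiming rule can be illustrated with a short Python sketch. It is not taken from the game's code: the function name `claiming_team` and the data layout are made up for this example, and the handling of ties (nobody claims the station) is an assumption, since the rule for ties is not described above.

```python
from collections import Counter
from typing import Optional


def claiming_team(touch_point_owners: dict[str, Optional[str]]) -> Optional[str]:
    """Return the team holding the relative majority of a station's touch points.

    touch_point_owners maps a touch point ID to the team that captured it,
    or to None if the touch point has not been captured yet.
    Assumption: if the two leading teams are tied, nobody claims the station.
    """
    counts = Counter(
        owner for owner in touch_point_owners.values() if owner is not None
    )
    ranked = counts.most_common(2)
    if not ranked:
        return None  # no touch point captured yet
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # tie between the two leading teams
    return ranked[0][0]


if __name__ == "__main__":
    station = {"track1": "red", "track2": "red", "track3": "blue", "track4": None}
    print(claiming_team(station))
```

A relative majority means that a team does not need more than half of all touch points. It only needs more captured points than any other team.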

ShadowBall

In 2011 four fellow students and I developed ShadowBall, a mixed-reality PC game using the Kinect for Xbox360. The game was developed as part of a user-oriented design lab, where prototypes are continuously developed and improved, based on user interviews and interactive test sessions.

As illustrated by the video, the game is a full-body experience. The level screen and the body silhouette (or shadow) of the user are projected onto the wall using a video projector. The game mechanics are similar to those of pinball machines, except that you hit the ball with your arms and legs, which are solid and therefore reflect the ball. The goal is to place the ball in a target area by hitting it at the right angle. There are different kinds of targets and balls, some of which are incompatible with each other. We developed a jungle-based theme where balls are represented by fruits or meat, and target areas are herbivores or carnivores respectively, to also make the game interesting for children. The game was developed in C# using Microsoft’s XNA framework. My tasks included devising the overall software architecture and implementing physics and rendering.

Game development & Machinima

Between 2008 and 2010 I contributed to Nexuiz, a free, open-source First Person Shooter (FPS) game. Nexuiz is similar to commercial games like Unreal Tournament or Quake. Its scene was most active between its launch in 2005 and early 2011. At that time Nexuiz’s development team created a commercial spin-off game also named Nexuiz. This move was not well received by the greater part of the community and many developers, who insisted on the open-source idea of the game. They created a fork of Nexuiz called Xonotic. Although the declining forum activity indicates that Xonotic’s community has been shrinking since mid-2013, it is nevertheless an entertaining, free FPS game.

My core contribution to Nexuiz was in the area of competitive gaming. With the help of other community members, I hosted several online tournaments in Europe and developed my first real software project: a PHP-based ladder website where players challenge others in different game modes. I also contributed several game features (using QuakeC), such as a warm-up mode where people would ready up to begin the game, and the ability to call time-outs.

In 2009 I hosted a video podcast called Nexuiz In-Depth (see this YouTube channel). In about 10 episodes I explained aspects of the in-game movement as well as the usage of many hidden commands that I discovered while doing programming work on the Nexuiz code base. Yet another contribution was the Nexuiz Demo Recorder. This Java GUI application automates mass-recording videos from Nexuiz or Xonotic. These games allow players to record their game sessions in a compressed format called “demo”, which does not contain video, but is just a dump of sent and received network packets that include in-game events, such as player locations and fired shots. The game engine can replay these demo files at arbitrary speeds while dumping the screen buffer to a video file. However, using this feature is cumbersome, because the recording process has to be started and stopped manually, which involves a lot of waiting time. With the Nexuiz Demo Recorder, users specify several recording jobs in advance. The tool then launches the game and batch-processes all jobs overnight, saving movie makers time that they can instead spend on making machinima or “frag movies”, which demonstrate the skill of individual players or teams.