This article describes an experiment where I apply acoustic fingerprinting and speech recognition to detect duplicates in my personal collection of episodes (mp3 files) of the German radio play “Philip Maloney”. I discuss the advantages and disadvantages of both approaches, and present the results of applying the Olaf acoustic fingerprinting implementation.

Introduction

Acoustic fingerprinting (Wikipedia) is a technique which lets you identify a piece of audio in a large database of audio files. The technology powers popular services such as Shazam or SoundHound: your smartphone only needs to listen to a short segment of an audio clip, and the service identifies it among millions of pre-indexed songs.

I have always wanted to play with this technology in a small hobby project. A few weeks ago, I found a great use case: determining whether the authors of a radio play (or the radio program managers) re-air old episodes under different titles and present them as new, even though they are not!

The play I’m referring to is the Swiss-German radio play “Die haarsträubenden Fälle des Philip Maloney” (website). I’m an avid listener and fan of the play, in which a private investigator solves murder mystery cases. The author, Roger Graf, has managed to make it very entertaining, thanks to absurdly weird characters and plot lines. Listening to the 15 to 25-minute episodes is a fun (rather than nail-biting) experience!

There are only about 400 distinct episodes (see here and Wikipedia), but the play has been on air for over 30 years (German source) with weekly broadcasts. That amounts to roughly 30 (years) * 52 (weeks/year) = 1560 broadcasts, so the chance that a broadcast episode is truly new is only ca. 25% (400 / 1560 ≈ 0.26). When listening to my private Maloney archive, which is a mixture of audio CDs and YouTube captures, I often had the subjective impression that I recognized an episode I had listened to just a few days earlier. Since my audio player app marks the episodes I have listened to as “done”, I should never have listened to the exact same episode file twice. This prompted me to investigate whether there actually are duplicates: episodes whose content is the same, but whose titles are different.

Approaches to detect duplicates

There are two basic approaches that come to mind when you want to detect duplicates in audio recordings: speech recognition (ASR) and acoustic fingerprinting.

Speech recognition technology has a long history. Nowadays, machine learning approaches are common, but many other kinds of algorithms solve this problem as well. Essentially, an ASR algorithm converts an audio stream (or file) into a textual transcript of the spoken words. To detect duplicate Maloney episodes, you apply ASR to an episode’s audio file and compare the resulting transcript with the transcripts of all other, already transcribed episodes, which are stored in a database. The comparison needs to be fuzzy (e.g. using the edit distance) to be robust. To see why, imagine that recording #1 of an episode had lower volume levels than recording #2. This could cause the ASR algorithm to miss a few words in recording #1 (which it did not miss in recording #2), so the two transcripts would not match verbatim.

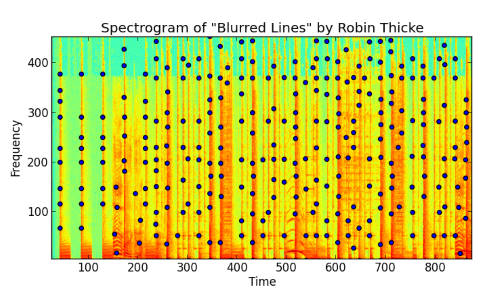
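The fuzzy transcript comparison described above could look like the following minimal Python sketch. It assumes a word-level Levenshtein (edit) distance, normalized by the length of the longer transcript, and an arbitrary similarity threshold of 0.8; the function names and the threshold are my own illustration, not part of any ASR library.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (characters or words)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution (0 if equal)
        prev = cur
    return prev[-1]


def transcript_similarity(t1, t2):
    """1.0 for identical word sequences, 0.0 for completely different ones."""
    w1, w2 = t1.lower().split(), t2.lower().split()
    if not w1 and not w2:
        return 1.0
    return 1 - edit_distance(w1, w2) / max(len(w1), len(w2))


def is_duplicate(t1, t2, threshold=0.8):
    """Assumed threshold: transcripts at least 80% similar count as duplicates."""
    return transcript_similarity(t1, t2) >= threshold


if __name__ == "__main__":
    full = "Maloney sass in seinem Büro und wartete auf Kundschaft"
    missed_word = "Maloney sass in seinem Büro wartete auf Kundschaft"
    print(transcript_similarity(full, missed_word), is_duplicate(full, missed_word))
```

A single word missed by the ASR system only lowers the similarity slightly instead of breaking an exact match. Note that this pure-Python distance takes O(n·m) time, which gets slow for transcripts of several thousand words; an optimized implementation would be preferable in practice.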

Acoustic fingerprinting is a different approach with much lower computational complexity than speech recognition. The algorithm splits an audio file into many very short segments and computes a characteristic fingerprint (a set of mathematical features) for each segment. The features are based on a frequency spectrum analysis and are chosen to be robust against distortions, such as varying loudness levels or other noise in the segment. Thus, the fingerprints of two recordings still match even if one of them contains noise or has a lower volume. To detect duplicate Maloney episodes, you would select a short clip (e.g. 30 seconds long) of an episode, compute its fingerprints (from the even shorter segments, each a few seconds long), and match them against a database containing the fingerprints of all other episodes.
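To make the idea more concrete, here is a heavily simplified, landmark-style fingerprinting sketch in Python with NumPy, in the spirit of the well-known Shazam approach. It is not Olaf’s actual algorithm: the frame size, hop size, fan-out and the “strongest bin per frame” peak picking are assumptions chosen for brevity. Each hash combines two spectral peaks and their time distance, and matching votes on the time offset between clip and reference. Thanks to this voting, a clip may start anywhere in an episode (e.g. after a 25-second intro) and still match.

```python
from collections import Counter

import numpy as np

FRAME = 1024   # samples per FFT frame (assumed value)
HOP = 512      # samples between consecutive frames (assumed value)
FAN_OUT = 5    # each peak is paired with this many following peaks (assumed)


def spectral_peaks(signal):
    """Return (frame_index, frequency_bin) of the strongest bin in every frame."""
    window = np.hanning(FRAME)
    n_frames = 1 + (len(signal) - FRAME) // HOP
    peaks = []
    for i in range(n_frames):
        frame = signal[i * HOP : i * HOP + FRAME] * window
        spectrum = np.abs(np.fft.rfft(frame))
        # argmax ignores the overall gain, so a quieter copy yields the same peak
        peaks.append((i, int(np.argmax(spectrum))))
    return peaks


def fingerprints(signal):
    """Hash each peak with the next FAN_OUT peaks: ((f1, f2, dt), t1)."""
    peaks = spectral_peaks(signal)
    hashes = []
    for j, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[j + 1 : j + 1 + FAN_OUT]:
            hashes.append(((f1, f2, t2 - t1), t1))
    return hashes


def build_index(signal):
    """Map every hash of a reference recording to the frames where it occurs."""
    index = {}
    for h, t in fingerprints(signal):
        index.setdefault(h, []).append(t)
    return index


def best_match(index, clip):
    """Vote on time offsets; return (offset_in_frames, votes) of the winner."""
    votes = Counter()
    for h, t_clip in fingerprints(clip):
        for t_ref in index.get(h, []):
            votes[t_ref - t_clip] += 1
    if not votes:
        return None, 0
    return votes.most_common(1)[0]


if __name__ == "__main__":
    sr = 8000
    rng = np.random.default_rng(0)
    t = np.arange(2048) / sr
    # synthetic "episode": 60 short tones at random pitches
    episode = np.concatenate(
        [np.sin(2 * np.pi * f * t) for f in rng.uniform(200, 3000, 60)])
    start = 40 * HOP  # the clip starts 40 frames into the episode
    # quieter (x0.3) and noisy copy of a part of the episode
    clip = 0.3 * episode[start : start + 30000] + rng.normal(0, 0.02, 30000)
    print(best_match(build_index(episode), clip))
```

Scaling the clip down does not change the strongest bin of any frame, and the added noise is far weaker than the tones, so the true offset collects most of the votes, while an unrelated recording only gets a handful of accidental hash collisions.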

Each approach has advantages and disadvantages:

| Speech recognition | Acoustic fingerprinting |
|---|---|
| 👍 Recognition of similar content is robust against different encodings (loss due to file format compression, varying sampling frequency, …), noise, and even different speakers | 👍 Recognition of similar content is robust against different encodings and noise<br>👍 Performance (up to 1000x real-time)<br>👍 Recognition accuracy is high even for segments that contain both speech and background music<br>👍 System setup and complexity are lower than for ASR systems |
| 👎 Very compute-intensive: without a GPU, it is slower than real-time playback speed!<br>👎 A German ASR system is difficult to set up<br>👎 Recognition accuracy is negatively impacted when there is both speech and background music | 👎 Recognizes duplicates only when both files contain the same recording (i.e., would not detect two episodes with the same script but different speakers) |

This comparison makes it obvious that acoustic fingerprinting is the preferred solution. Nevertheless, I attempted both approaches, starting with speech recognition.

Experimentation and library selection

Speech recognition

My personal impression is that getting speech recognition to work leaves you two options: either you have no technical expertise, and thus you pay someone to do it for you, or you have deep technical expertise and set up your own system.

The first option (pay per use) would have been easy: just select a speech recognition API, e.g. the one by Google or Microsoft, and pay for the transcription. However, I did not feel like spending dough on a hobby project. I could have used a free tier (e.g. the one Microsoft offers), but to stay within the free quota, I could only have transcribed about 30 seconds of each episode for my transcript database. When matching unknown episodes, this carries a considerable risk of false negatives because of timing offsets. For instance, if two files contain the same episode, but file #1 starts with the intro music (ca. 25 seconds long) and file #2 does not, then their 30-second transcripts cover different parts of the episode, and this approach would fail to detect the duplicate.

I then attempted to build my own local, German-capable ASR system. Since I’m not an expert, I failed miserably. There are many tutorials and link collections (see e.g. here) that explain how to train speech models for a specific language, but they are meant for experts in the field. I managed to set up DeepSpeech with a pre-trained German model. DeepSpeech transcribed test utterances that I recorded myself with OK-ish quality. But when I applied it to a Maloney episode, the output was complete rubbish. A possible cause is the mixture of speech and background music, which may have confused DeepSpeech. Given that transcription ran at only ~0.5x real-time, I did not pursue this approach any further.

Acoustic fingerprinting

When searching for an audio fingerprinting library, I was aiming for a solution that is easy to use and deploy. A search engine will first point you to the following libraries. I discarded them because of their high deployment complexity (multiple components in a client/server configuration):

- Dejavu: a Python-based client which requires a MySQL server for storing fingerprints. According to its README, matching runs at approx. 3x real-time.

- AcoustID: a commercial fingerprinting database, which is free for open source projects. Requires the chromaprint C library to create fingerprints, for which there are high-level language bindings, such as pyacoustid. However, I did not want to put fingerprints of the Maloney episodes into a public database, nor host the AcoustID database service myself, which is not officially supported anyway.

- Soundfingerprinting: a C# library which requires the installation of a separate, commercial database called Emy, for which a free community edition is available.

Digging further, I found the Olaf C library, which addressed all my needs. It comes with a command line interface, so I can call it from any programming language. The C library embeds the lightweight LMDB key-value store, which eliminates the need to deploy a separate database server. Finally, Olaf is ridiculously fast, processing audio at 500x to 1200x real-time, which makes it two orders of magnitude faster than Dejavu.
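A quick back-of-the-envelope calculation shows what these speed factors mean for a single episode. The sketch below assumes a 20-minute episode (the middle of the 15 to 25-minute range) and uses the speed factors mentioned in this article; the helper name is hypothetical.

```python
def processing_seconds(audio_minutes: float, speed_factor: float) -> float:
    """Wall-clock seconds to process `audio_minutes` of audio at `speed_factor`x real-time."""
    return audio_minutes * 60 / speed_factor


if __name__ == "__main__":
    episode_minutes = 20  # assumed typical episode length
    for name, factor in [("DeepSpeech", 0.5), ("Dejavu", 3),
                         ("Olaf (low end)", 500), ("Olaf (high end)", 1200)]:
        print(f"{name:16} {processing_seconds(episode_minutes, factor):8.1f} s")
```

Transcribing one episode with DeepSpeech would take 40 minutes, Dejavu would need about 6.7 minutes, and Olaf only 1 to 2.4 seconds. Dividing Olaf’s 500x to 1200x by Dejavu’s 3x gives a speed-up of roughly 170x to 400x, which is where the two orders of magnitude come from.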

Implementation

I implemented a proof-of-concept Python application which fetches Maloney episodes from YouTube and the SRF 3 radio station, and attempts to detect duplicates using Olaf. The code is available on GitHub here, including instructions on how to get it to work. Internally, my tool uses youtube-dl to fetch episodes from YouTube; I discussed that tool in more detail in this article.

Please note that my implementation does not cover all existing episodes (as listed here). The code only checks the episodes from the 115 released CD albums (retrieved from YouTube) and the recently aired episodes that SRF 3 makes publicly available. For legal reasons, the radio station only offers the episodes of the last 12 months. Numerous episodes (starting from episode #365, “Die Stimme des Täters”) are not yet covered by the CDs. They were aired in 2019 or earlier.
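Once every episode is indexed, duplicates can be found by querying each episode against the index and merging all episodes that match each other into groups. The following sketch shows one way to do this with a union-find structure. The query callable is a hypothetical stand-in for a fingerprint lookup (for example, a wrapper around Olaf’s command line interface); it is not Olaf’s API, and not the code of the tool on GitHub.

```python
from typing import Callable, Dict, Iterable, List, Set


def group_duplicates(titles: Iterable[str],
                     query: Callable[[str], List[str]]) -> List[Set[str]]:
    """Merge episodes whose audio matches into groups (union-find).

    `query(title)` is a hypothetical lookup returning the titles of all
    indexed episodes whose fingerprints match the given episode.
    """
    parent: Dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees flat
            x = parent[x]
        return x

    for title in titles:
        find(title)
        for other in query(title):
            parent[find(other)] = find(title)  # union the two groups

    groups: Dict[str, Set[str]] = {}
    for title in list(parent):
        groups.setdefault(find(title), set()).add(title)
    # only groups with more than one title are duplicates
    return [g for g in groups.values() if len(g) > 1]
```

Grouping, rather than collecting individual pairs, means that if A matches B and B matches C, all three titles end up in one group.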

Results

The only duplicate I found was episode #233 “Die Sprengmeister”, which was aired with exactly that title, but published on CD under a slightly different title, “Der Sprengmeister” (with a separate intro in which the announcer actually utters that title). I also found a few episodes whose titles look very similar, but whose content is entirely different, e.g. “Die Verfolger” vs. “Die Verfolgte”, or “Das andere Leben” vs. “Das doppelte Leben”.

My tool did find a few other “duplicates”, but these were technicalities caused by the downloading process. They occurred where titles differ between the YouTube and SRF 3 sources, as the following examples illustrate:

- Das Geschäftsessen vs. Das Geschaftsessen (incorrect umlaut)

- Das Kaffekränzchen vs. Das Kaffeekränzchen (incorrect spelling by the uploader)

- Der schöne Fuß vs. Der schöne Fuss (German vs. Swiss spelling: Swiss Standard German writes “ss” instead of the eszett “ß”)
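Why not simply compare titles? The following sketch, a hypothetical illustration rather than part of my tool, normalizes titles by case folding (which also turns “ß” into “ss”) and stripping umlaut marks, then scores them with Python’s difflib. It catches the spelling variants above, but it also rates different episodes such as “Die Verfolger” and “Die Verfolgte” as very similar, so title heuristics alone cannot replace comparing the audio.

```python
import difflib
import unicodedata


def normalize_title(title: str) -> str:
    """Case-fold ("ß" -> "ss"), strip umlaut marks, collapse whitespace."""
    folded = title.casefold()
    decomposed = unicodedata.normalize("NFKD", folded)  # "ä" -> "a" + combining mark
    stripped = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(stripped.split())


def title_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two normalized titles."""
    return difflib.SequenceMatcher(None, normalize_title(a), normalize_title(b)).ratio()


if __name__ == "__main__":
    for a, b in [("Das Geschäftsessen", "Das Geschaftsessen"),
                 ("Das Kaffekränzchen", "Das Kaffeekränzchen"),
                 ("Der schöne Fuß", "Der schöne Fuss"),
                 ("Die Verfolger", "Die Verfolgte")]:
        print(f"{a} / {b}: {title_similarity(a, b):.2f}")
```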

Conclusion

With the help of modern algorithms, I was able to test a hypothesis that I had formed based on subjective impressions. This experiment was very interesting, because it showed that my hypothesis was, in a sense, false: the authors of the radio play did not cheat. But my subjective memory, which told me that I was listening to episodes twice, was not wrong either. The algorithms revealed that my collection was to blame, because it contained duplicate episodes whose file names differed only in minor spelling details.

Even though the experiment’s hypothesis turned out to be false, this project helped me become more familiar with these technologies. Nothing beats having a concrete use case for learning and applying new technologies. Let me know in the comments if you have applied speech recognition, acoustic fingerprinting, or similar technologies and learned something from it!
